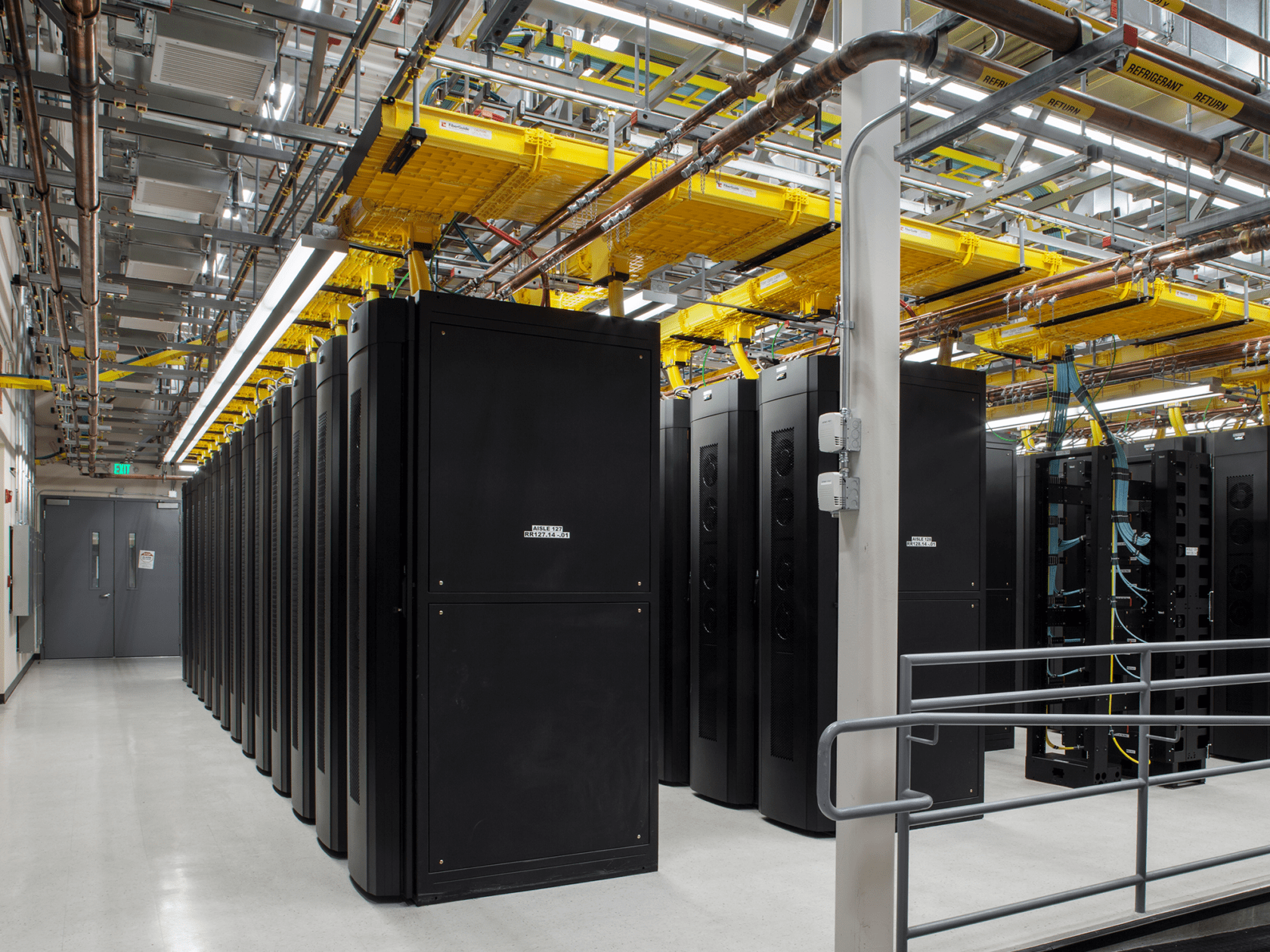

The future of data centers

Salas O'Brien shares insights on the emerging trends and the future outlook of data center needs and technology.

Quarantine changed the world’s productive landscape

Physical conference rooms gave way to virtual meeting spaces. Families and friends turned to virtual game nights, video chats, and streaming services for entertainment and socializing. Online commerce became more important than ever, and students and teachers depended on digital classrooms to continue education.

Post-quarantine, these trends continue.

As more activity shifts to digital spaces, we will need more cloud storage for our pictures, music, gaming, cloud-based software, and Internet of Things devices. The question is what type of data center owners and builders want to construct: hyperscale, large, medium, or small 5G facilities.

How can we design data centers that efficiently serve the world of the future? This article explores various solutions, outlining the benefits and drawbacks of each.

Size

Before we begin discussing solutions, we need to briefly discuss two fundamental constraints for today’s data centers. Size is the first.

Regional data centers have grown from 16 megawatts (MW) to 150 MW, and the trend continues toward 300 MW and beyond. As demand grows, these large “hyperscale” data centers are becoming necessary to keep up with capacity. Economics are a primary driver: one large 350 MW center is less expensive than seven 50 MW centers.

However, there are a few challenges with hyperscale data centers:

- Their backup generators can exceed the limits of EPA air permits. Staying within those limits would require a non-contiguous campus and reduce the available emergency backup generator capacity.

- For redundant backup, another hyperscale data center would be required.

- Location is another challenge. To deliver on connectivity and speed, data centers need to be close to end users and to strong networks. For example, Northern Virginia is a unique internet hub where many companies choose to locate for connectivity. In 2018, the Marea undersea fiber optic cable began operating between Spain and Virginia, with transmission speeds of up to 160 terabits per second, 16 million times faster than the average home internet connection.

- Larger data centers require more available land and a local population large enough to supply the workforce needed to operate them.
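The Marea comparison can be sanity-checked with simple arithmetic. The sketch below uses only the two figures quoted above (160 Tbit/s and a factor of 16 million); the home connection speed it prints is derived from those figures, not measured.

```python
# Sanity check of the Marea comparison, using only the figures quoted in the text.
MAREA_CAPACITY_BPS = 160e12  # up to 160 terabits per second
SPEEDUP_FACTOR = 16e6        # "16 million times faster"


def implied_home_speed_mbps(capacity_bps: float, factor: float) -> float:
    """Return the home connection speed (Mbit/s) implied by capacity / factor."""
    return capacity_bps / factor / 1e6


if __name__ == "__main__":
    speed = implied_home_speed_mbps(MAREA_CAPACITY_BPS, SPEEDUP_FACTOR)
    print(f"Implied average home connection: {speed:.1f} Mbit/s")
```

In other words, the comparison assumes an average home connection of roughly 10 Mbit/s.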

Proximity

As 5G cell phone usage becomes a reality, data center needs will shift dramatically. A 5G antenna has a much shorter range than a 4G antenna. In fact, 5G will require five times as many antennas as 4G.

Urban areas will be the first, and quite possibly the only, areas to reach the full potential of 5G speeds. Even there, speeds will be compromised when users are inside buildings, because walls, windows, and ceilings degrade 5G signals considerably.

Speed is an important factor in data usage. The closer a data center is to the user, the lower the latency and the faster the response. That said, it wouldn’t be possible to have a hyperscale data center on every corner.
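Distance sets a hard floor on latency because signals in optical fiber travel at roughly two-thirds the speed of light. The sketch below estimates round-trip propagation delay only; it assumes a refractive index of about 1.47 for the fiber and ignores routing, queuing, and processing delays, as well as the fact that real fiber routes are longer than straight-line distance.

```python
# Round-trip propagation delay over optical fiber (physics floor only).
SPEED_OF_LIGHT_KM_PER_S = 299_792.458
FIBER_REFRACTIVE_INDEX = 1.47  # assumption: typical value for silica fiber


def fiber_rtt_ms(distance_km: float, n: float = FIBER_REFRACTIVE_INDEX) -> float:
    """Round-trip time in milliseconds for a one-way fiber path of distance_km."""
    if distance_km < 0:
        raise ValueError("distance must be non-negative")
    speed_km_per_s = SPEED_OF_LIGHT_KM_PER_S / n
    return 2 * distance_km / speed_km_per_s * 1000


if __name__ == "__main__":
    # Hypothetical distances: nearby edge site, metro, regional, cross-country.
    for km in (10, 100, 1000, 4000):
        print(f"{km:>5} km -> {fiber_rtt_ms(km):6.2f} ms round trip")
```

Under these assumptions, every 100 km of fiber adds roughly 1 ms of round-trip delay before any network equipment is involved, which is why placing compute close to users matters for latency-sensitive applications.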

How can data centers address these constraints and still deliver results?

Solution #1: Edge data centers

Edge data centers are small data centers that are connected to larger data centers but located close to the customers they serve. Processing data closer to customers reduces latency, shortens response times, and improves the customer experience.

Cell towers near the customer point of use can support small edge and micro-edge data centers that increase connectivity for speed-sensitive operations, like financials, streaming, and online gaming.

Several companies, like EdgeMicro, are building and installing these container-based data centers of 7 to 14 racks to enhance our collective internet experience. These edge centers (also known as supernodes) will be required to increase our connectivity.

Solution #2: Colocation

When large hyperscale data center companies need more rack space than they have, they lease space from a colocation facility. Colocation providers typically have space available in their speculative data centers, which are built before tenants have committed.

This is not a decision made lightly, as most lease commitments run 10 to 15 years. We expect the trend toward colocation to continue.

Our designs, third-party reviews, and commissioning efforts have helped colocation facilities run smoothly and provide the level of service that’s expected of a hyperscaler. For example, a Fortune 50 telecommunications company hired us to provide design reviews of its colocation facility. We found and corrected multiple single points of failure where the secondary power or secondary water sources would not have functioned as intended if the primary sources failed. Had those points of failure gone undetected, they could have caused serious outages for the facility’s clients and potentially destroyed millions of dollars’ worth of equipment.

Solution #3: Operations and rack space

Data center operations are typically managed by a company that started out in some aspect of the data center business but developed expertise in management and operations. Each company has its own design for reliability, which gets revised and refined with each new facility it builds. Where possible, these refinements are retrofitted onto previously built projects without construction or equipment modification. As described elsewhere in this white paper, more data centers will depend on containers, automation, cloud, edge computing, and other technologies that are, simply put, open source to the core. This means Linux will not only be the champion of the cloud; it will rule the data center. Operating systems like CentOS 8, Red Hat Enterprise Linux, SLES, and Ubuntu are all Linux distributions and will see a massive rise in market share by the end of 2020.

The current data explosion is predicted to quadruple data storage requirements within five years. As demand for storage continues to grow, solid state drives (SSDs) will become the preferred storage medium and will eventually replace traditional hard drives. SSDs are faster and more reliable, and they can store more data in the same physical space. The conversion to SSDs will allow the same rack space to hold a much greater amount of data.
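A fourfold increase in five years corresponds to a compound annual growth rate of about 32 percent. The sketch below derives that rate and projects capacity year by year; the 10 PB starting requirement is a hypothetical value chosen only for illustration.

```python
# Compound annual growth implied by "quadruple in five years".


def implied_annual_growth(multiple: float, years: float) -> float:
    """Annual rate r such that (1 + r) ** years == multiple."""
    return multiple ** (1 / years) - 1


def project(start: float, annual_rate: float, years: int) -> list[float]:
    """Requirement at the end of each year 0..years under constant compound growth."""
    return [start * (1 + annual_rate) ** y for y in range(years + 1)]


if __name__ == "__main__":
    rate = implied_annual_growth(4, 5)
    print(f"Implied growth: {rate:.1%} per year")
    # Hypothetical starting requirement of 10 PB.
    for year, pb in enumerate(project(10.0, rate, 5)):
        print(f"Year {year}: {pb:5.1f} PB")
```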

Solution #4: Containerization

Containerization is a site design method in which a large amount of equipment is packaged into large, standardized containers. Most hyperscale builders want fast time to market, lower cost per MW, and higher quality. These three requirements favor preloaded, standardized containers built in a factory, often near urban areas where an experienced, trained workforce is more readily available than in the remote locations of many hyperscale data centers.

In the realm of data centers, containerization allows most of the electrical construction and some level 4 functional commissioning to be done off site, leaving less level 4 commissioning to be done on site. Level 4 commissioning involves testing each piece of critical equipment individually under varying conditions to confirm its performance. With containerization, level 5 integrated systems commissioning can occur earlier in the schedule. Level 5 commissioning involves testing the entire system or building as a whole to verify that all of the proven components work together cohesively and that the system complies with the intent of the design.
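The dependency between the two levels can be expressed as a simple readiness check: level 5 integrated testing assumes that every component has already passed level 4. The sketch below is a hypothetical model (the class names and equipment tags are invented for illustration and are not part of any commissioning standard or tool); it also reports how much level 4 work was completed off site, as containerization allows.

```python
from dataclasses import dataclass
from enum import Enum


class Location(Enum):
    FACTORY = "off site"
    FIELD = "on site"


@dataclass
class Equipment:
    tag: str                   # hypothetical equipment tag
    level4_location: Location  # where the level 4 test was performed
    level4_passed: bool


def level5_readiness(items: list[Equipment]) -> tuple[bool, list[str]]:
    """Return (ready, pending_tags); ready only if every item passed level 4."""
    pending = [item.tag for item in items if not item.level4_passed]
    return (not pending, pending)


def offsite_share(items: list[Equipment]) -> float:
    """Fraction of level 4 tests performed off site (e.g., at the container factory)."""
    if not items:
        return 0.0
    return sum(item.level4_location is Location.FACTORY for item in items) / len(items)


if __name__ == "__main__":
    equipment = [
        Equipment("UPS-1", Location.FACTORY, True),
        Equipment("SWGR-1", Location.FACTORY, True),
        Equipment("CRAH-3", Location.FIELD, False),
    ]
    ready, pending = level5_readiness(equipment)
    print(f"Ready for level 5: {ready}; pending level 4: {pending}")
    print(f"Level 4 performed off site: {offsite_share(equipment):.0%}")
```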

Containerization also makes job sites safer: because less construction work happens on site, there is less trade stacking (multiple trades working in the same area at once) in many areas.

Our experts have reviewed equipment layouts for containerization projects for several Fortune 500 software and telecommunications companies, ensuring efficient placement, proper clearances, and sufficient air flow.

Solution #5: Speed to market

Speed to market is second on the data center priority list, right behind safety, especially since existing data center demand far outstrips the capacity currently under construction and in design. Several methods are used to increase speed to market.

Site adaptations and containerization greatly reduce the time from breaking ground to lockdown. Modular construction, in which equipment is assembled on skids in a factory, fully wired, and tested, has also improved speed to market; McDean Electric has taken a similar approach for years.

However, even these methods are still not fast enough. Hyperscale data centers that previously took 18 months to build must now be completed in 12, and some smaller colocation facilities must be completed in as little as 6 months. Feeling ever-present pressure to achieve more with less, many developers choose less expensive (and sometimes less experienced) data center contractors and subcontractors who must climb the data center learning curve, which frequently adds time and cost to the project.

As this industry continues to mature and developers spend their money on experience, standard designs, and containerization, they will realize they can get even better speed to market with high-quality contractors and an experienced labor force for construction and commissioning. For instance, our Common-Sense Commissioning™ approach helps reduce the typical commissioning timeframe by completing the commissioning process much earlier in the construction schedule than is usual. For the top telecommunications and software clients that we serve, this approach has resulted in an earlier turnover date for the customer and a faster speed to market.

Solution #6: Multi-story

Multi-story data centers have been around for some time now, mostly in repurposed office buildings that have been converted into data centers in land-scarce cities around the world.

Due to the small footprint of these buildings, they are typically used by colocation providers. The electrical infrastructure and chillers are typically on the lower levels, while the rooftop is reserved for cooling towers.

Purpose-built multi-story data centers are being designed with stairs, elevators, mechanical rooms, and electrical rooms outside the building footprint, which makes the entire floor plate leasable for data center operations. In areas rich with data centers, like Ashburn in Northern Virginia, the price of land has escalated to more than $2 million per acre, which makes multi-story data center construction a necessity.
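Stacking floors spreads the land cost over more floor area. The sketch below uses the $2 million per acre figure from the text and 43,560 square feet per acre; the 40 percent building coverage ratio (the share of the site the footprint can occupy after setbacks, substations, and parking) is a hypothetical assumption, and the model ignores the extra structural cost of going vertical.

```python
SQFT_PER_ACRE = 43_560
LAND_PRICE_PER_ACRE = 2_000_000  # figure cited for Ashburn, Virginia
COVERAGE_RATIO = 0.40            # hypothetical share of the site the footprint may occupy


def land_cost_per_sqft(price_per_acre: float, coverage: float, stories: int) -> float:
    """Land cost per square foot of floor area for a building of `stories` floors."""
    if not 0 < coverage <= 1 or stories < 1:
        raise ValueError("coverage must be in (0, 1] and stories >= 1")
    floor_area_sqft = SQFT_PER_ACRE * coverage * stories
    return price_per_acre / floor_area_sqft


if __name__ == "__main__":
    for stories in (1, 2, 3):
        cost = land_cost_per_sqft(LAND_PRICE_PER_ACRE, COVERAGE_RATIO, stories)
        print(f"{stories}-story building: ${cost:,.2f} of land per sq ft of floor area")
```

Under these assumptions, land costs about $115 per square foot of floor area for a single-story building and about a third of that for three stories.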

Most data center operators would prefer to stay single story, but total cost, safety, and square footage requirements often drive the need to go vertical.

Solution #7: Efficient use of power

Data centers use a lot of power, so maximizing efficiency is critical. By 2025, data centers are estimated to use 4.5 percent of the world’s total energy.

Power usage effectiveness (PUE) will become even more important as more data centers are built. PUE is the standard efficiency benchmark: total facility power divided by the power delivered to IT equipment. Because a facility can never use less power than its IT load, PUE cannot fall below 1.0, and the closer it is to 1.0, the more efficient the data center. An efficient data center typically has a PUE of 1.2 to 1.3, but with total immersion cooling, water-cooled racks, or high-density multi-blade servers, PUE can be as low as 1.06 to 1.09. Achieving a low PUE makes the initial infrastructure more expensive, but the energy savings can be substantial and accumulate year after year.
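The PUE definition translates directly into code. The sketch below computes PUE from two metered power values and also reports the non-IT overhead (cooling, power conversion losses, lighting, and so on), which per kilowatt of IT load is simply PUE minus 1. The 1,000 kW IT load and the total facility loads are hypothetical sample values.

```python
def pue(total_facility_kw: float, it_kw: float) -> float:
    """Power usage effectiveness = total facility power / IT equipment power."""
    if it_kw <= 0:
        raise ValueError("IT power must be positive")
    if total_facility_kw < it_kw:
        raise ValueError("total facility power cannot be less than IT power")
    return total_facility_kw / it_kw


if __name__ == "__main__":
    it_load_kw = 1_000  # hypothetical IT load
    # Hypothetical total facility loads spanning the ranges mentioned in the text.
    for total_kw in (1_500, 1_250, 1_070):
        value = pue(total_kw, it_load_kw)
        overhead_kw = total_kw - it_load_kw
        print(f"PUE {value:.2f}: {overhead_kw} kW of overhead per {it_load_kw} kW of IT load")
```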

For example, by verifying proper equipment operation and set points in a data center for one of our software clients, we reduced the PUE from 1.5 to 1.2, resulting in annual savings of thousands of dollars in energy and water costs.
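Because PUE scales total facility energy for a given IT load, the effect of a PUE change can be estimated directly. The sketch below assumes a constant IT load over a full year and models electricity only (water savings are not included); the 100 kW load and $0.10/kWh price are hypothetical, not figures from the project described above. Under the constant-load assumption, a drop from 1.5 to 1.2 cuts total facility energy by 20 percent regardless of the load.

```python
HOURS_PER_YEAR = 8_760


def annual_facility_kwh(it_load_kw: float, pue: float) -> float:
    """Total facility energy for a constant IT load: IT energy multiplied by PUE."""
    return it_load_kw * pue * HOURS_PER_YEAR


def pue_savings(it_load_kw: float, pue_before: float, pue_after: float,
                price_per_kwh: float) -> tuple[float, float, float]:
    """Return (kWh saved per year, cost saved per year, fraction of total energy saved)."""
    before = annual_facility_kwh(it_load_kw, pue_before)
    after = annual_facility_kwh(it_load_kw, pue_after)
    saved_kwh = before - after
    return saved_kwh, saved_kwh * price_per_kwh, saved_kwh / before


if __name__ == "__main__":
    # Hypothetical inputs: 100 kW IT load, electricity at $0.10/kWh.
    kwh, dollars, fraction = pue_savings(100, 1.5, 1.2, 0.10)
    print(f"Saved {kwh:,.0f} kWh (${dollars:,.0f}) per year, {fraction:.0%} of total energy")
```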

Other factors that have reduced energy usage include the increased use of LED light fixtures controlled by motion sensors, like the Lightway edge-lit fixture from CCI. Using modern electrical, server, and rack equipment rated for wider temperature and humidity ranges, some as high as 90 degrees F and 90 percent humidity, can also reduce energy use, because a higher set point allows more energy-efficient cooling in many climates.

The availability of power is a concern for hyperscale data centers. Many large-scale operators do not use solar power at the site, even when rooftop space is available. Rather than take on the added design and construction cost of reinforcing the structure and roofing systems, they choose to buy or fund large-scale offsite solar or wind projects to reduce their environmental impact. For example, Microsoft purchased 230 MW from ENGIE in Texas in 2019, bringing Microsoft’s renewable energy portfolio to more than 1,900 MW.

Data-center-funded renewable energy projects will increase in number to help operators offset their carbon footprints. Many hyperscale operators have a goal of 100 percent renewable energy, a goal Google has already reached.

A look into the future of data centers

The solutions mentioned above are all highly advanced, but most are achievable today. Because data centers are so important to the future, innovation continues at blazing speed: new products will be invented, new techniques will be developed, and new requirements will emerge. Data centers of the future will likely include some or all of the following:

- Total immersion of servers in thermally conductive dielectric fluids to reduce cooling requirements. Piping infrastructure with pumps would be required, but the result would be fewer fans, fewer compressors, and reduced power usage.

- Quantum computers are still in the early stages of development, but they will have a significant impact on the data center of the future. They will spark new breakthroughs in science, AI, medications, and machine learning, and they will be involved in activities like diagnosing illnesses sooner, developing better materials to make things more efficient, and improving strategies to help us reach financial goals. The infrastructure quantum computers require is hard to build and operate because they must be kept near absolute zero (about -459 degrees Fahrenheit). IBM Quantum has designed and built the world’s first integrated quantum computing system for commercial use, and it operates outside the walls of a research lab.

- Deep underwater data centers that use the natural cooling effects of the ocean. Their size will be limited, but they will be containerized and very efficient, with a lot of free cooling.

- Large fuel cells operating in parallel, such as those from Bloom Energy, could power entire buildings, while smaller units, such as those from Ballard, may be installed above IT racks to power individual racks. This innovation would require natural gas infrastructure.

- Industrial wastewater from cooling towers could be diverted to storm sewers instead of sanitary sewers. Currently, this wastewater must be processed by wastewater treatment plants. Diverting it would not only help the environment but could also reduce costs for the project and for the local entity providing water treatment.

- Natural gas generators could reduce emissions compared with diesel generators. They would require natural gas infrastructure, and a piped gas supply would extend runtime from the typical 72 hours of on-site fuel storage to an indefinite period.

- Water-cooled racks and chips to meet the demands of higher-density racks. Bringing water inside a data center to the racks was once unheard of, but now it may be required: higher-density racks must be cooled by unconventional but proven and tested means. Some very high-power, high-capacity chips, typically for AI, require water or other liquid cooling delivered directly to the chip. Higher education data centers, such as those at Georgia Tech, are now using rear-door cooling. Water-cooled, high-power, high-density chips, such as those from Cerebras, could raise the power required at each rack to as much as 60 kW. Google and Alibaba are already using water-cooled racks, with others soon to follow.

- Higher-density racks, like the 50 kW racks that higher education data centers are using, are becoming much more common.

- Construction and design will change. Data centers have historically been built like regular buildings, stick by stick on site. Now much of the equipment that was once stick-built has moved to modular construction, modular construction is moving toward containerization, and many projects are already containerized and scalable.
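The case for liquid cooling at high rack densities follows from a sensible-heat balance, Q = m · cp · ΔT, where m is the coolant mass flow rate. The sketch below solves it for the flow needed to remove 50 kW and 60 kW per rack (the densities mentioned above); the 10 K temperature rise and the room-temperature property values for water and air are assumptions, and real designs depend on the specific cooling equipment.

```python
# Approximate fluid properties near room temperature (assumed constants).
WATER_CP = 4.186         # kJ/(kg*K)
WATER_DENSITY = 1000.0   # kg/m^3
AIR_CP = 1.005           # kJ/(kg*K)
AIR_DENSITY = 1.2        # kg/m^3


def volumetric_flow_m3_s(heat_kw: float, cp: float, density: float, delta_t_k: float) -> float:
    """Solve Q = m * cp * dT for mass flow m (kg/s), then convert to volume flow (m^3/s)."""
    mass_flow_kg_s = heat_kw / (cp * delta_t_k)
    return mass_flow_kg_s / density


if __name__ == "__main__":
    delta_t = 10.0  # assumed coolant temperature rise, K
    for rack_kw in (50, 60):
        water = volumetric_flow_m3_s(rack_kw, WATER_CP, WATER_DENSITY, delta_t)
        air = volumetric_flow_m3_s(rack_kw, AIR_CP, AIR_DENSITY, delta_t)
        print(f"{rack_kw} kW rack: water {water * 60_000:.0f} L/min "
              f"vs. air {air:.2f} m^3/s ({air / water:,.0f}x the volume)")
```

Under these assumptions, a 60 kW rack needs roughly 86 L/min of water, while air would need about 3,500 times that volume flow to carry the same heat, which is why very dense racks push designers toward water.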

Key Takeaways

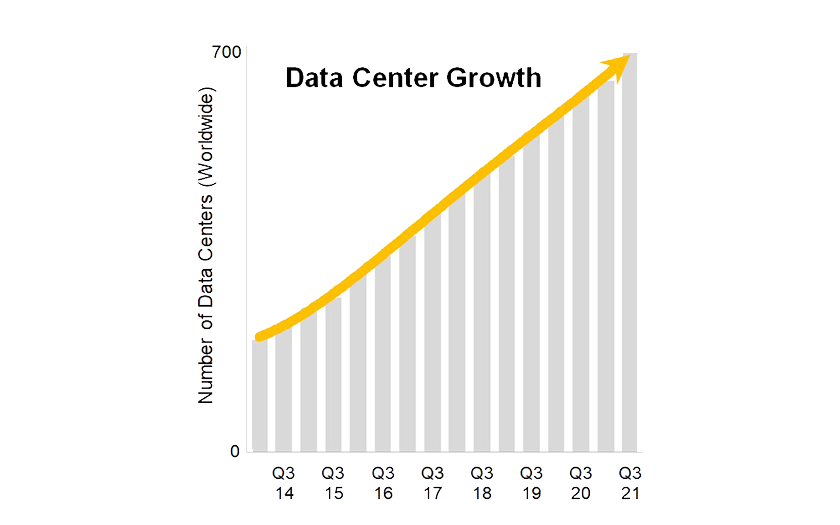

Data center construction has changed over the last two decades, and it will continue to evolve. It slowed drastically after the dot-com bust but has grown consistently and substantially since 2010, at a rate of 15% year over year. This trend is expected to continue for the next eight to ten years.

Creating cost-effective data centers quickly, so they reach the market sooner, will likely be best achieved through containerization, using experienced engineers, contractors, subcontractors, and commissioning agents.

Tim Brawner, PE, CxA, LEED AP, EMP

Tim Brawner is a commissioning agent with extensive experience working for large power companies and electrical contractors, as well as designing data centers as a professional engineer. He draws on this broad range of expertise to deliver high-quality commissioning services to clients at Salas O’Brien. Tim holds a Bachelor’s Degree in Electrical Engineering with a focus on Electrical Design and Commissioning.